Imagine yourself in front of a stove. How do you know whether it’s hot? You could appeal to conventional knowledge (with apologies for the oven pun): “stoves get hot when they are on and I can see that this one is on” (maybe because you see flames or a glowing surface or an indicator light). Or you could appeal to felt experience: “I touched it, it’s hot.” But you won’t have the same options when it comes to knowing your brain. After all, how do you know your brain … is even there? Maybe the very question has you rolling your eyes and declaring, “of course my brain is there; everyone has a brain, no one could survive without one,” perhaps adding in exasperation, “we know this.” This presumption of shared knowledge might very well include relating neuroscientific facts to your own experiences: “Right now I am remembering what I ate for breakfast, and remembering recent events is impossible without a hippocampus, which is part of the brain!” Nobody would doubt your sanity if you said as much. Nonetheless, proclamations about the brain are fundamentally different from direct experiences of the brain (one’s own brain, that is). Even brain injuries cannot be experienced as coming from the brain! If you’ve had a stroke that makes it effortful to speak or move, surely you may experience effortful speech or movement, but that’s not the same as experiencing the brain itself as impaired. You could even show us a picture of your brain with a little dark mark where the neurologist said the stroke occurred, but such knowledge (photographic evidence corroborated by experts, even!) is still at a curious remove from the felt-ness of your own body.

That’s the thing about your experience of your body: it’s yours and yours alone. Elaine Scarry has written about this in regard to the strange relationship between pain and certitude:[1] There’s nothing more certain than your own pain in the moment of experiencing it (think of bashing your finger with a hammer), but it’s pretty easy to doubt somebody else’s pain (are they really hurting or are they just acting?). This is not to say that another’s pain is wholly inaccessible to us. We can, of course, adopt an empathetic attitude towards it, hence the very possibility of the expression “I feel your pain” … but that’s an altogether different creature from the certitude of your own in-the-moment discomfort.

In the same fashion, our brains don’t really seem to be an authentic part of our bodies, despite all our knowledge to the contrary. Indeed, we are so enthralled by this strange object that we can think about and dissect and photograph and zap and measure, but never feel. Why? One clue comes from how the word “brain” is used in the everyday world. Here’s an example from a recent New York Times editorial: “In every moment, your brain must figure out your body’s needs for the next moment and execute a plan to fill those needs in advance.”[2] The brain here is talked about as if it were … human, frankly! — it plans, it figures out. A close reading of the quote reveals that not only does the brain “figure out,” but it “must figure out,” as if it were under some kind of moral compunction to do so. But ask yourself, what exactly must the brain plan and figure out … if not us? We are planned, we are figured out. Given the way we talk about it, the brain has become a linguistic substitute for our selves; we invoke it to explain why we act the way we do.

At the end of the day, though, I think we’ll be hard-pressed to deny that it’s not the brain but we humans who have long since been under a moral compunction to explain ourselves … as caused by something else. At the turn of the twentieth century, my favorite snarky philosopher Friedrich Nietzsche wrote that “… the psychological necessity of a belief in causality lies in our inability to imagine an event without an intention.”[3] It would certainly seem that during the more than one hundred years since, we have been grooming The Brain as some version of precisely that: an autonomous agent with intention that causes us to perceive, to think, to feel and (ironically) to have intent.

However. Perceiving, thinking, feeling, having intent — we can explain them in any way we’d like but they do not happen because of our explanations of them! We are free to use neuroscience to explain why we feel that the stove is hot, but our experience of hot stoves is not brought about or made possible by neuroscience. Experience precedes explanation. To assume otherwise is to mistake our ideas about reality for reality itself.

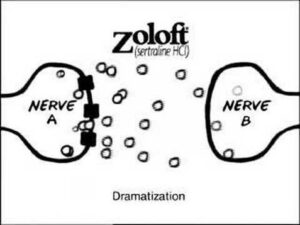

Such confusion is what in recent history pharmaceutical companies have exploited through brain-based explanations of mental illness, especially in the U.S. where they are allowed to advertise directly to consumers. The commercials for Zoloft (sertraline), one of the most commercially successful antidepressants of all time, featured an animated cartoon of nerve cells in the brain:

Notice the caption: “Dramatization.” Why is it there? By drawing our attention to the obvious fact that the cartoon is not real, the caption cleverly insinuates that something in fact real is represented here. Pfizer, the company that makes Zoloft, would have us believe that, despite being a simple cartoon, what we are witnessing is not a fabrication but a dramatization of reality. The commercial’s voiceover expresses a similarly peculiar relationship to what’s real: “While the cause is unknown, depression may be related to an imbalance of natural chemicals between nerve cells in the brain. Prescription Zoloft works to correct this imbalance.” This language moves from uncertainty (“the cause is unknown”) to provisional certainty (“depression may be related to …”) to certainty (“Zoloft works …”). It’s not much of a leap to see how feeling better on Zoloft, or even deciding to take it in the first place, could be explained and justified by the idea that depression is the brain malfunctioning.

Drug marketers leverage the circularity of mistaking explanation for reality, like in this quote from the industry’s first professional magazine devoted to direct-to-consumer advertising:

But what does it mean to talk about a drug ‘working’ in the first place? The language of “work[ing] to correct” in the Zoloft voiceover hints at an ethical framework — after all, only an error can be corrected, and, properly speaking, only humans make errors. But in a clever sleight of hand the ethical burden of correcting an error is transferred to the drug itself, whose job is not that of mechanical functioning but of purpose, of intent, which maybe resembles the so-called Protestant work ethic that Max Weber wrote about: It’s not that Zoloft works by correcting the chemical imbalance, it works to correct the imbalance — as in moving towards a goal, fulfilling a calling, like a dutiful laborer clocking in. Note, too, that the brain chemicals worked on in the Zoloft commercial are “natural,” which insinuates that there’s nothing wrong in the sense of morally deviant about depression. The conclusion is that, if we humans are morally absolved for being depressed, and if we are under no ethical obligation to work on ourselves, we only have to let Zoloft do its job, which is to correct the error from which we suffer but for which we are no longer at fault.

Of course, in everyday speech, to talk about drugs working is to mean that they relieve symptoms. In the case of antidepressants like Zoloft, whether or not they relieve symptoms according to the clinical standards of the allopathic (drug- and surgery-based) medical community has been under debate for over a decade as statisticians pore over the original clinical trial data by which the drugs were approved by the Food & Drug Administration. Some of these analyses have concluded that some of the most commercially successful antidepressants, including Zoloft, are not meaningfully different from a placebo (symptom relief without the drug). Drug makers see the placebo effect as a problem, since it threatens their ability to prove clinically that their drugs work. Drug marketers, on the other hand, see the placebo effect as an opportunity; they hope, through advertising, to bolster consumers’ real-life experiences of antidepressants. To feel better on a drug because of a commercial would be literal sensationalism, if we take sensationalize in one of its earliest meanings: to shock the senses through melodrama.

Today when we hear the word sensationalism we are likely to think of tabloid news: lurid stories grabbing attention through physically large headlines and incredible pictures (whether in hard copy or online), at least compared to standard news. In other words, tabloids have the semblance of news, but they are designed to stupefy, and to be consumed quickly and uncritically. It turns out that “tabloid” first referred to pills. In the 1880s the pharmaceutical company Burroughs Wellcome & Co. coined and trademarked the word to name what today we call tablets: individualized doses of drug compounds pressed into small, usually ovoid shapes (as distinguished from powders that have to be measured out). Burroughs took a rival drug company to court in 1902 over use of the proprietary word. The case was thrown out, largely because in the intervening years “tabloid” had become a popular word even outside of medicine. The legal ruling observed that “… the word has been so applied generally with reference to the notion of a compressed form or dose of anything.”[5] We can understand today’s drug advertising as an outcome of the dual history of the tabloid as compressed drug and the tabloid as compressed news. Both are fast and easy to swallow.

[2] Feldman Barrett, L. (2020). Your Brain Is Not for Thinking. New York Times. Nov. 23.

[3] Nietzsche, F. (2017 [1901]). The Will to Power. Penguin Classics. (section 627, p. 358).

[4] Erskine, K. (2002). The power of positive branding. DTC Perspectives. Mar-Apr:16.

[5] The Oxford English Dictionary. (usage example under “tabloid”)